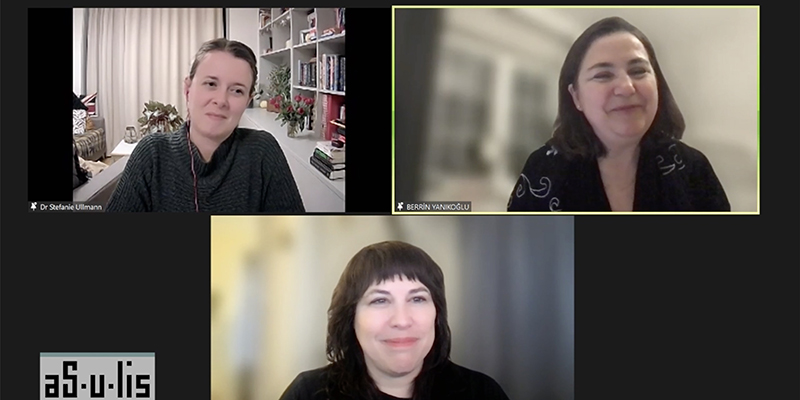

The online talk "Artificial intelligence and hate speech: Opportunities and risks" was held on January 25, 2022. It was moderated by Professor Berrin Yanıkoğlu and featured Claudia von Vacano from Berkeley D-Lab and Stefanie Ullmann from the Centre for Research in the Arts, Social Sciences and Humanities as speakers.

Claudia von Vacano and Stefanie Ullmann discussed the projects they have carried out on hate speech detection, as well as the advantages and disadvantages of detecting hate speech with artificial intelligence.

Claudia von Vacano began her talk by discussing the rise of hate speech in the USA and emphasized the link between hate speech and hate crimes, which can lead to genocide. Speaking about hate speech studies at the Cornell Center for Social Sciences, Vacano pointed to deficiencies in the conceptualization and theorization phases and noted that the analyses performed with machine learning models remain binary.

She shared an approach to bringing data-intensive social science to life, one that divides discourse into several categories at the conceptual level.

Vacano stated that her work addresses the identification of the target group exposed to hate speech, the theorization of counter speech, and how different items can be placed on a scale. She mentioned that they mapped the structures in discourse ranging from hate speech to threats, the legitimation of violence, and genocide. Using a multidimensional measurement model on multiple datasets drawn from YouTube, Twitter and Reddit, she said, they try to understand what kind of discourse is present rather than simply whether hate speech is present.

Stefanie Ullmann began her talk by introducing the “Giving Voice to Digital Democracies” project and its interdisciplinary working group, and continued by explaining the scope of her work. She touched upon questions about approaches to hate speech and how counter speech can be automated. Turning to the problems of current hate speech detection, Ullmann emphasized that binary classification/labeling is often used and that the languages covered by datasets, dataset sizes, and the labor-intensive processes behind them keep datasets relatively limited compared to what artificial intelligence can do today. She also emphasized the influence of human moderators and algorithms on decision making, and the fact that social media companies have different guidelines/policies. She then described the quarantine method. Explaining how different methods and versions are used to quarantine online hate speech, she mentioned the "hate meter" (Hate O'Meter) tool and the ability for users to create their own individual guide sets. In the last part of her talk, she noted that hateful and humiliating discourse is produced by combining different images and texts. Sharing her prediction that multi-modal hate speech will increase in the future, Ullmann concluded by saying that the use of hateful expressions will remain a problem, even with the high accuracy rates achieved by the systems developed.

Speaking about the differences between the two projects in the Q&A part of the talk, Vacano drew a distinction between the “detection” and the “measurement” of hate speech and said that, in line with this distinction, different models will be used in place of the binary system, which overlooks the different dimensions of hate speech. She went on to discuss the conceptualization of counter speech and its inclusion in the definition, as well as the approaches that need to be considered in order to be inclusive. Ullmann, for her part, stated that the difficulties of interdisciplinary work stem from the newness of the field, and that students who have no experience in linguistics but are growing interested in the field help them develop different perspectives.

Both speakers concluded by addressing the distinction between censorship and freedom of expression and the threats involved in applying artificial intelligence to hate speech, along with ethical issues, approaches to bias, human moderators, the importance of diversity in making assessments, institutional difficulties in accessing datasets, and the importance of interdisciplinary studies and collaborations in this area.

Video of the online talk